Return to robot index ↑

Last updated April 1, 2018.

I was once a young boy endlessly fascinated by what I called digger-dumpers. I've become a young man trying to automate the world. Along the way I've built a lot of robots, some of which deserve the name, and some that probably don't. Building the stuff below has given me some of the greatest joy I've ever had in my life. This page exists to ensure that I don't forget.

In reverse order of project inception:

After college: New project.

College: 1701 Rover, Armstrong (Self Driving Segway), and The Flying Bulldogs Drone Swarm.

Late High School: Drobio Quadcopter, Gasp Rover, MaRv (Miniature Rover), and RHoDeS (Hovercraft Base).

World Robot Olympiad Robots: Newton (Elder Support Robot), and DaRWIn (Dancing Robot).

Early high school: Arduino BTCar, Soccerbots, Qenduro (Amphibious RC Vehicle), Tricksbot09 (Autonomous Maze Navigator), and the Millennium Plus ROV (Submarine).

Early stuff: Vertigo (Competition Robot), Prison Break (Wall Vaulter), MindWarp (Voice Controlled Robot), Qbots (Miniature Water Polo), and Dusty (Cleaning Robot).

With thanks to my family, Hanish Mehta, Sharmaji, Shiva, Han Zhang, Christopher Leet, Ethan Weinberger, Qingyang 'Q' Chen, Yash Savani, Rushabh Mashru, Alex Carrillo, Kevin Abbott, Alfred Tuotol Delle, Sachith Gullapalli, Philip Piper, Karan Gupta, Hiral Sanghvi, Anton Xue, Doron Rose, Gerardo Carranza, Betsy Li, and the countless others who've built things with me, given me sage advice, and helped me along the way.

Something's cooking.

Return to robot index ↑

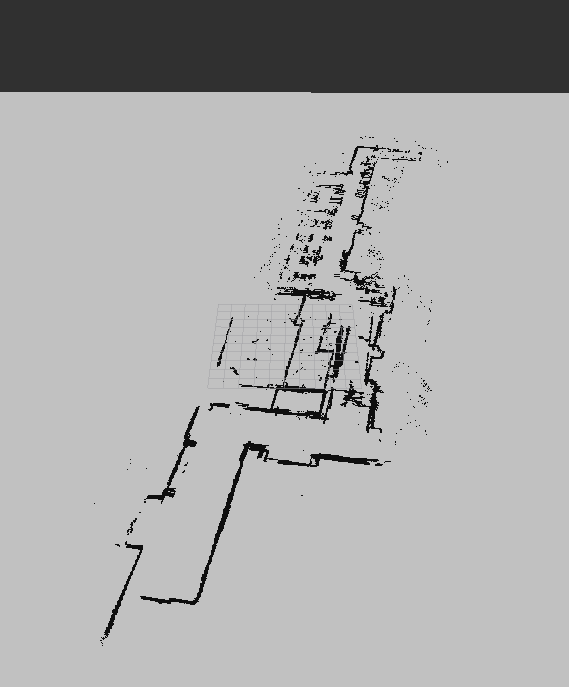

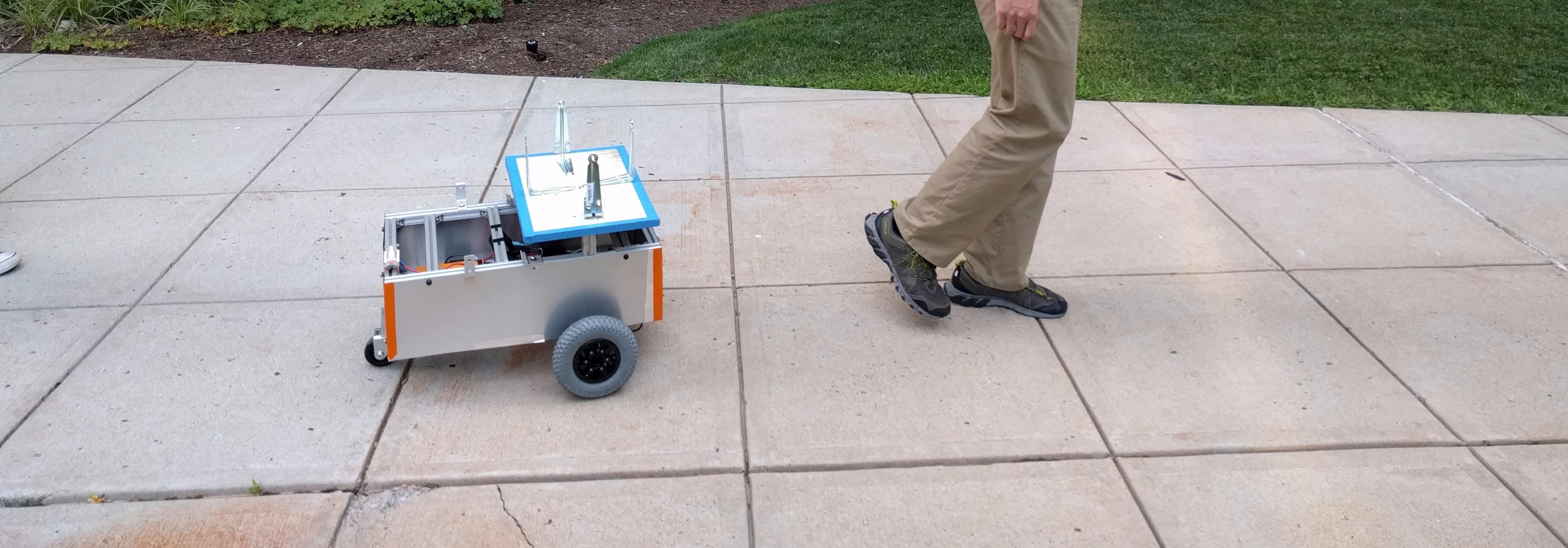

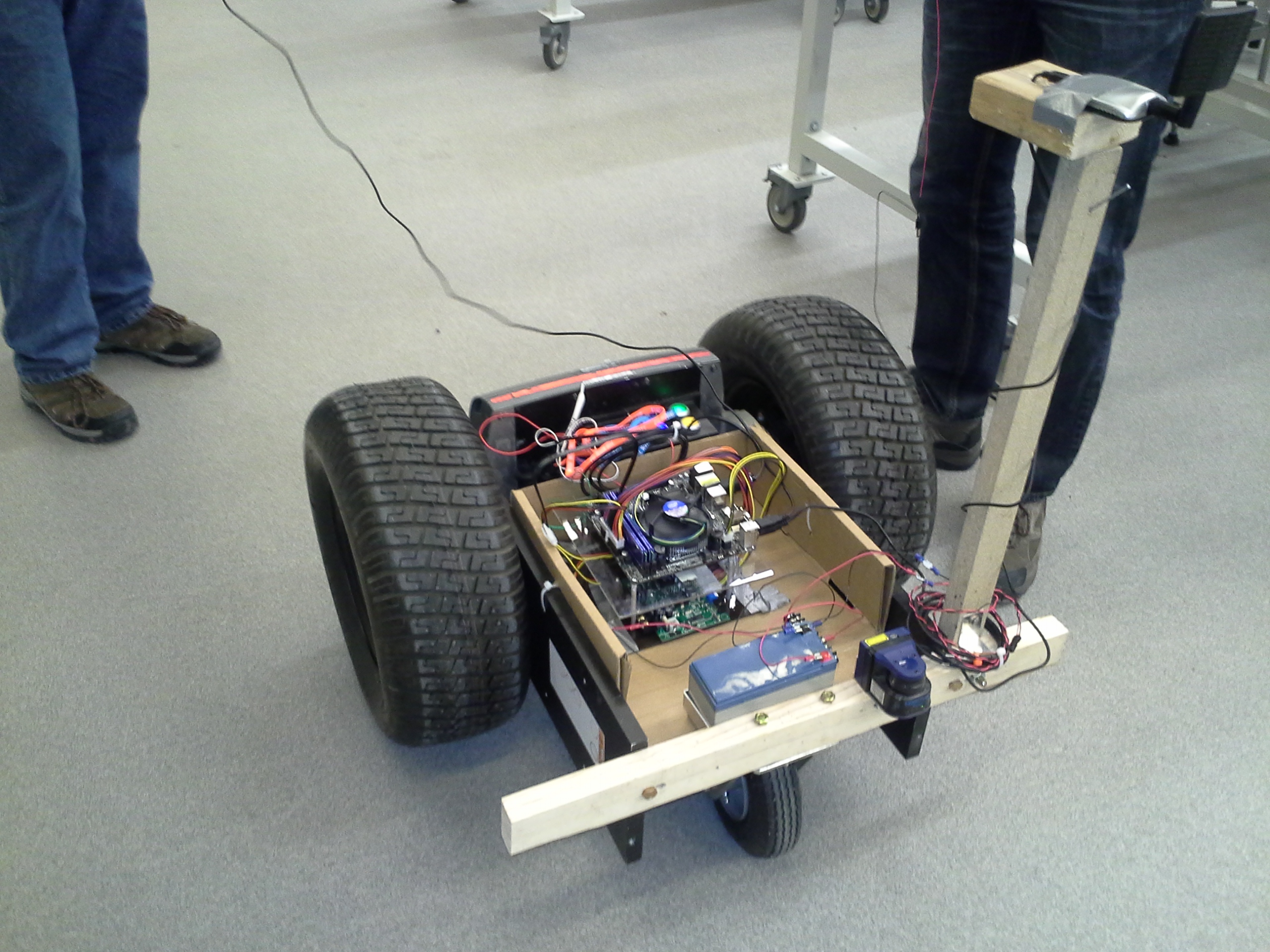

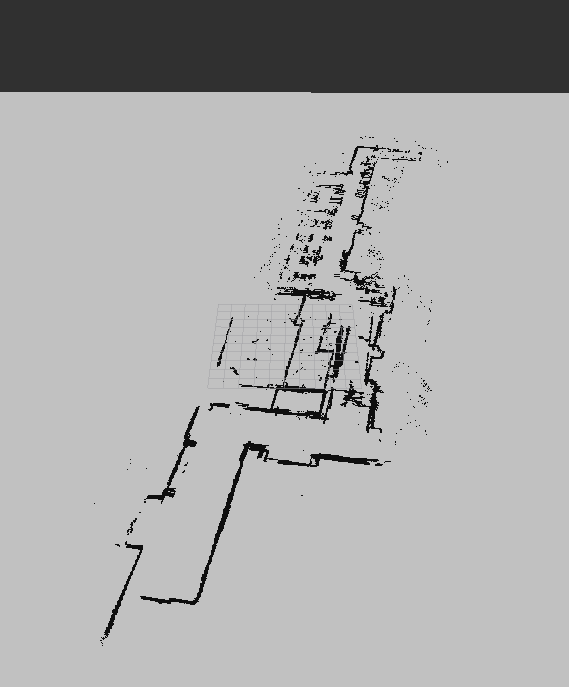

In the summer of 2016, I led a team of three people -- the other two were Han Zhang, currently (March 2018) a Power Electronics Engineer at Astranis, and Ethan Weinberger, a senior at Yale (the guy in the video below) -- that built, in rapid succession over four months, (1) an obstacle-avoiding follow-me robot (very similar to what the Piaggio Gita ended up being) to carry items for the elderly and disabled, (2) a prototype autonomously navigating delivery robot similar to what Starship makes, and (3) an early prototype of a robotic LIDAR system for construction sites, intended for automated QA/QC (basically error-detection).

All three of these systems, built on top of the same custom rover base, worked well enough to validate the ideas and our ability to build them. We eventually decided not to work on any of those projects long term for a variety of reasons, but I'm very proud that we built working versions of all of them. In order to pull these projects off, we rewrote a big portion of the ROS navigation stack, built a (leg) gait identification model on top of some Frankensteinian sensor fusion, hacked together a bunch of cheap Chinese planar lidars to produce a working 3D lidar system, harvested a bunch of obstacle avoidance training data off openly accessible security cameras, and, of course, actually built a stable robot that could carry up to 100 pounds and still navigate smoothly in indoor environments, among other things.

Return to robot index ↑

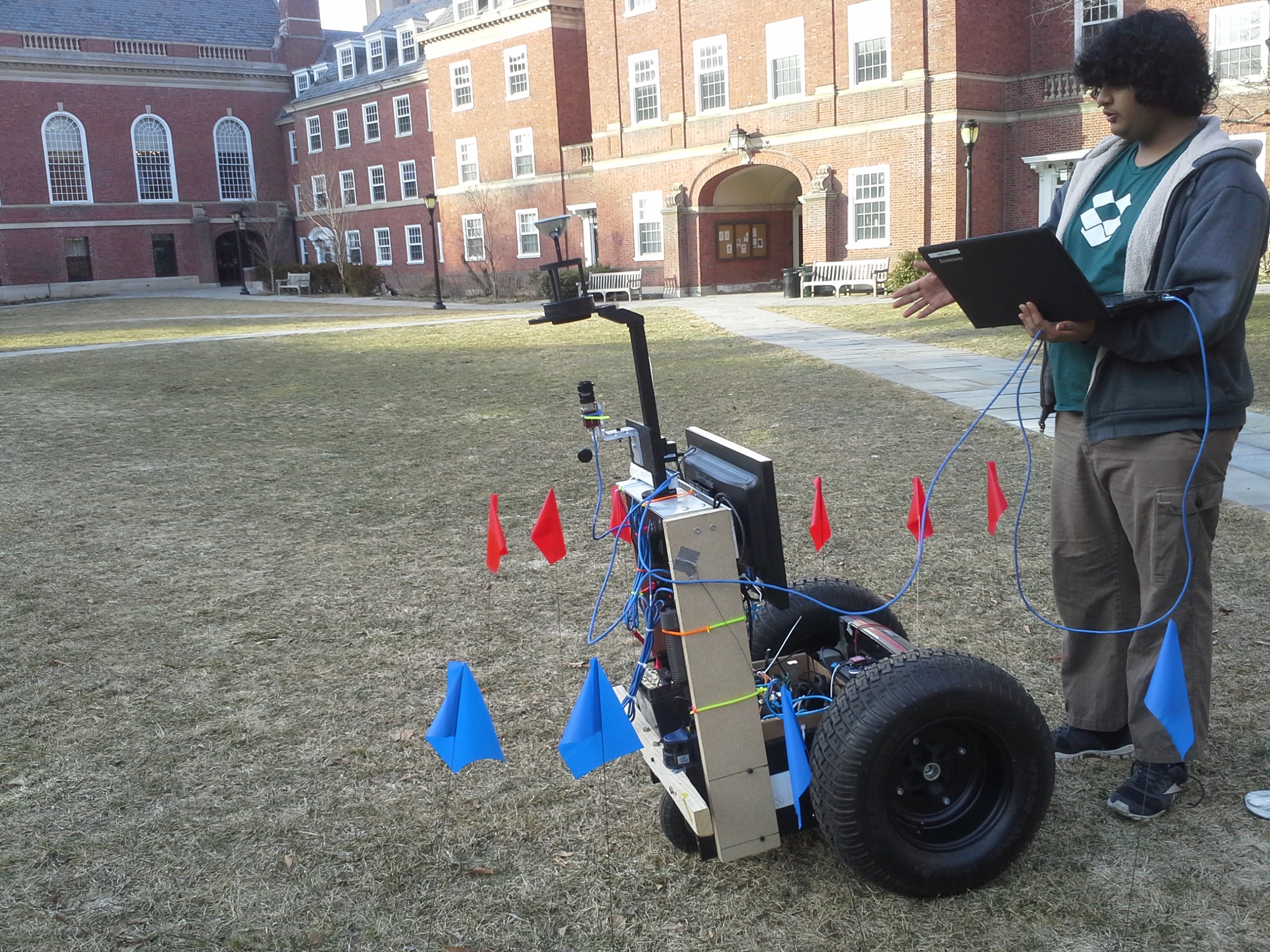

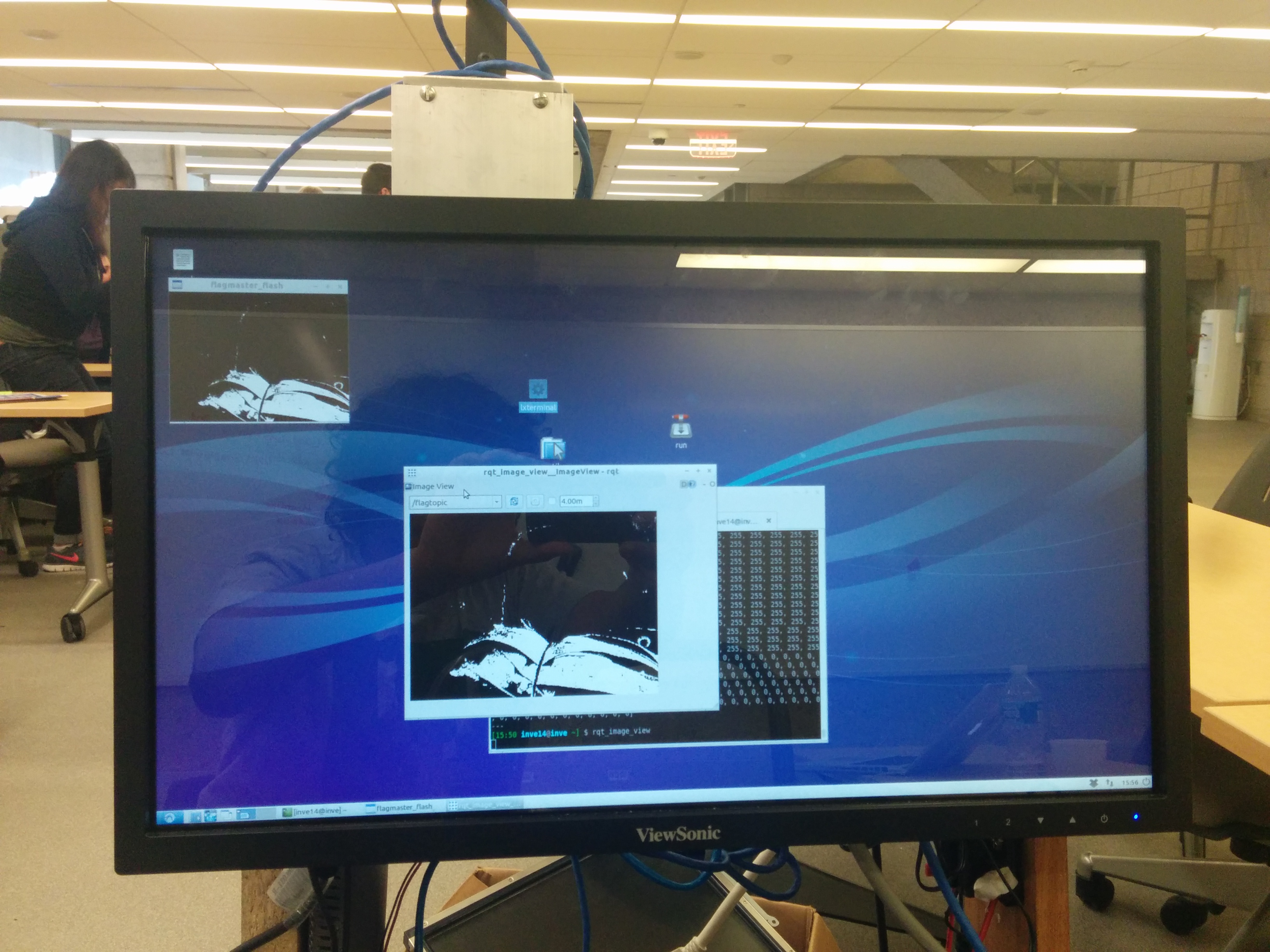

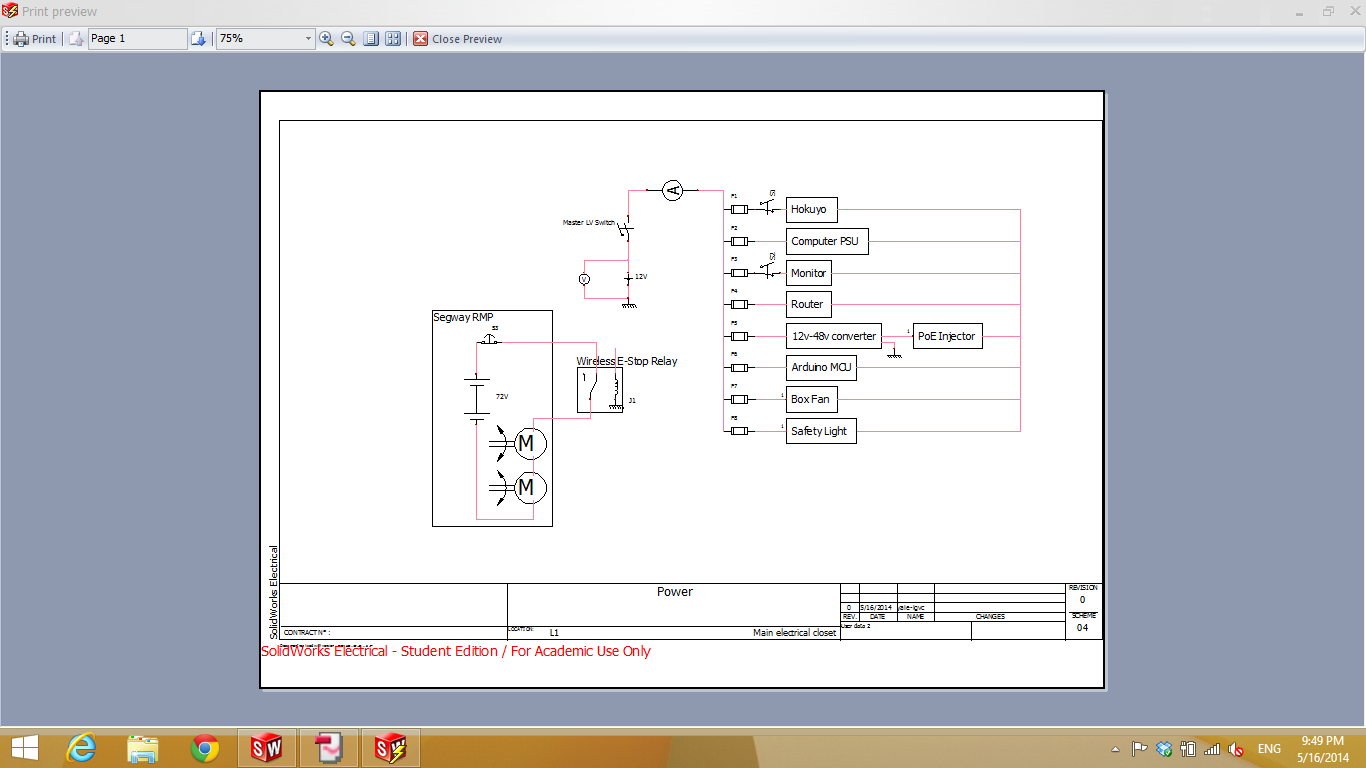

Over my freshman year, and part of the summer, I was the computer vision lead and one of the two navigation programmers for Armstrong, a Segway RMP retrofitted for autonomous navigation that the nascent Yale Undergraduate Intelligent Vehicles entered into the 2014 Intelligent Ground Vehicles Competition (IGVC). IGVC is (or at least was) basically a shitty undergrad version of the DARPA Grand Challenge. We won the Rookie of the Year award in 2014 -- usually teams don't start winning until their third year or later because of inexperience with competition procedure more than anything else, and we were the best of the first-time participants that year. I helped design the bot's overall software stack, wrote most of the computer vision code for flag mapping, line following, and obstacle detection, and spent so many hours debugging the navigation system that seeing a quaternion makes my right eye twitch involuntarily.

That's me, in probably the unhealthiest state I've ever been in.

The team was led by Alex Carrillo, and included Kevin Abbott, Han Zhang, Philip Piper, and Jason Brooks. We spent many hours in the New Haven snow, the Michigan rain, and the West Point sun together testing this thing, hot swapping parts, ideas, and algorithms like we were Apollo 13 astronauts on meth. Very late in the game, I oversaw a swap from a high definition 360 camera-mirror setup, the jewel of our original vision strategy that basically didn't work (and that I'd opposed from the start due to pointless complexity), to a bunch of cheap independent webcams running at extremely downsampled resolutions but high framerates for both line following and flag/obstacle identification. Simple is best, especially in complicated projects.

This project introduced me to relatively big league robotics -- we used multiple lidars for mapping, a full ROS stack, a somewhat custom Simultaneous Localization and Mapping (SLAM) system, separate global and local path planners, as well as a bunch of drivers for components we had to write ourselves. This was to prove useful in the future.

Return to robot index ↑

This project was, in no uncertain terms, an epic failure. What follows is more post-mortem than log entry, so bear with me.

Midway through my freshman year of college, I started The Flying Bulldogs as an undergraduate unmanned aerial vehicle (UAV) working group. Our initial membership included Han Zhang, Chris Leet, Qingyang 'Q' Chen, and Alfred Tuotol Delle. As summer approached, I dreamed up an ambitious project for our scrappy crew -- a swarm of four drones forming a fault-tolerant distributed task allocation and completion system for arbitrary tasks, upon which we could build the drone application platform to rule them all. The idea was to combine probabilistic best-effort task decomposition and auction-based task allocation with some kind of hierarchical learning model for task taxonomy to get the swarm to figure out how to do complex tasks from relatively high-level descriptions. Han, Chris, and Q decided to join me in working on this.

Casting about for a test use case, we happened upon a professor in the geology department who introduced us to the problem of magnetometric surveying -- collecting data about the metallic composition of vast swathes of land using magnetometers. At the time (late 2013) the state of the art was land-based surveying via either a rolling cart or a specially rigged car -- planes typically cannot (or at least at the time couldn't) get close enough to the ground at a consistent enough height to get clean data. You would then combine this data with aerial imagery and radar data. This whole process, from collecting to combining, is incredibly time-consuming and error-prone, especially if you have less than perfect odometry, which is usually the case. A swarm of drones using redundant surveying to produce a far more accurate dataset that combined magnetometry, camera data, and radar data relatively quickly and without human intervention would make this task orders of magnitude easier, especially if the system were robust to some degree of failure or fault among the drones individually.

Han, Chris, Q, and I believed that this was a good first task to build the swarm system around -- it was purely a survey task that didn't involve object transportation or manipulation, but it was non-trivially complex in terms of how task allocation and fault-tolerance would work. We pitched this idea to the Yale Center for Engineering, Innovation, and Design (CEID), which agreed to fund the project and our living expenses in New Haven over the summer as part of the second annual CEID Summer Fellowship (2014). The CEID wasn't able to completely cover our budget for living expenses -- they usually fund teams of three or less -- so we also took funding from the Yale Computer Science department.
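For readers unfamiliar with auction-based task allocation, here is a minimal Python sketch of the general idea. It is not our code (we never got that far); the function names, the straight-line-distance bid, and the re-auction step are all assumptions chosen for illustration, and it ignores task decomposition and learning entirely.

```python
import math


def bid(drone_pos, task_pos):
    """A drone's bid is its travel cost: straight-line distance to the task."""
    return math.dist(drone_pos, task_pos)


def run_auction(drones, tasks):
    """Sequential single-item auction (illustrative only).

    drones: dict of drone name -> (x, y) current position
    tasks:  dict of task name  -> (x, y) location to survey
    Returns a dict of task name -> winning drone name.
    """
    positions = dict(drones)  # copy so the caller's positions are not mutated
    assignment = {}
    for task, task_pos in tasks.items():
        # Lowest bid wins; ties go to the first drone in dict order.
        winner = min(positions, key=lambda d: bid(positions[d], task_pos))
        assignment[task] = winner
        # Assume the winner ends up at the task, which changes its later bids.
        positions[winner] = task_pos
    return assignment


def reallocate(assignment, drones, tasks, failed):
    """Re-auction the tasks held by a failed drone among the survivors."""
    survivors = {d: p for d, p in drones.items() if d != failed}
    orphaned = {t: tasks[t] for t, d in assignment.items() if d == failed}
    updated = {t: d for t, d in assignment.items() if d != failed}
    updated.update(run_auction(survivors, orphaned))
    return updated


drones = {"a": (0, 0), "b": (10, 0)}
tasks = {"t1": (1, 0), "t2": (9, 0), "t3": (2, 0)}
plan = run_auction(drones, tasks)
recovered = reallocate(plan, drones, tasks, failed="a")
```

In this toy run drone `a` wins `t1` and `t3` and drone `b` wins `t2`; when `a` is marked as failed, its two tasks are re-auctioned and `b` picks them up. A real system would also need bids that account for battery and sensor state, and a way to detect failures in the first place.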

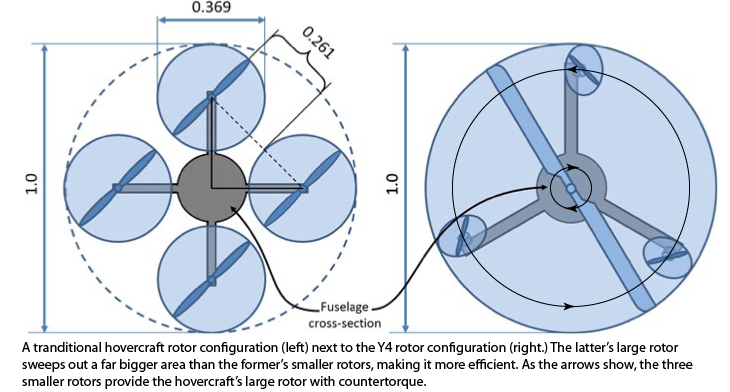

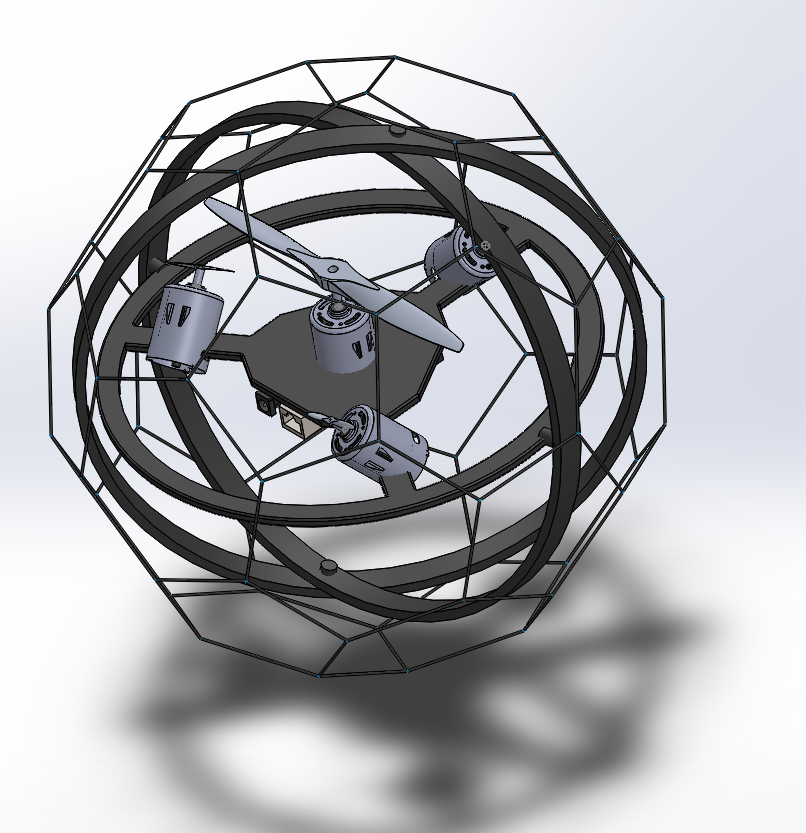

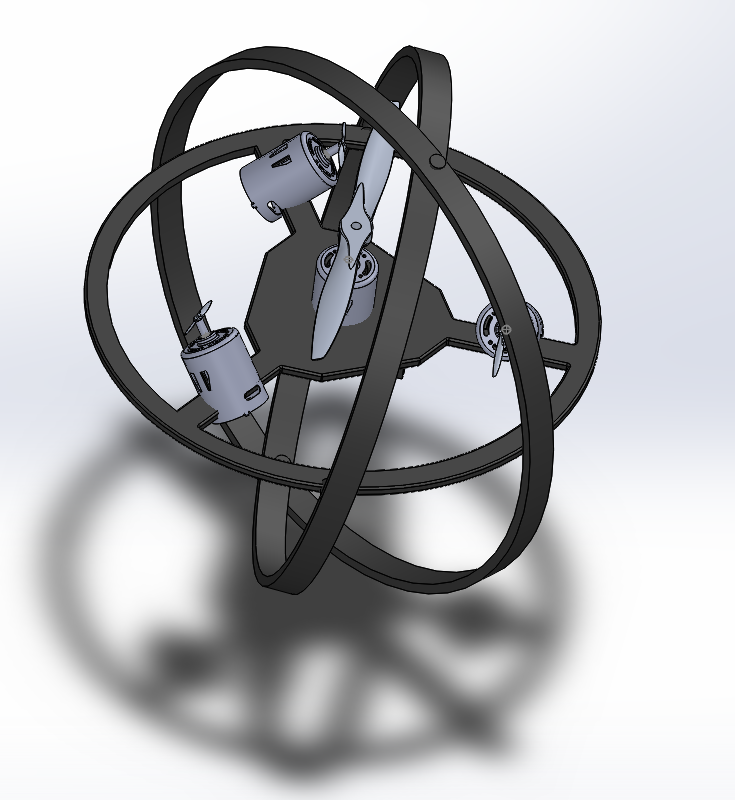

We didn't know it then, but the shit had already started to hit the rotors. As part of the research we did for our project proposals, we had unearthed work by the researchers Paul E. I. Pounds and Scott Driessens at the University of Queensland on their 'Y4' quadrotor configuration, so named because it is essentially a Y-shaped tricopter with a large central rotor (as illustrated above). Pounds and co. found that the Y4 configuration was 15-25% more efficient than the usual 'hovercraft-style' motor configurations. As fate would have it, a team led by then Yale-undergrad Stephen Hall was actually working on Y4 configuration quads at the CEID at the same time for a senior project. Given that we were concerned about battery life in the field for our drones, we figured using the Y4 configuration might be a good idea, especially since we could draw on Stephen's recent experiences.

We were also very concerned about the durability of our drones, given that we intended to throw them into harsh environments in close proximity to each other. Most consumer drones are pretty fragile, especially if something hits their rotors. We wanted ours to be robust to collisions and crashes, or at least hardy enough that they wouldn't be totally destroyed out in the field. Safety of operation was also a huge concern -- the Y4 configuration's large central rotor can make it far more dangerous in some situations, especially around takeoff. As such, we decided some kind of cage was in order. For a variety of reasons, the magnetometer would have to be on the outside of the cage, so the cage would also have to act as a gimbal, steadying the magnetometer with respect to the ground.

And so was born the GY4S drone -- a Gimballed Y4 Surveying drone. We planned to build a carbon fiber external gimbal covered by a carbon fiber mesh, allowing the drone to survive drone-drone collisions, crashes, and ground contact. We envisioned the drone being able to roll in friendly terrain to save power, then take-off when that was no longer possible, intelligently adapting to and taking advantage of its environment while still being ready for anything. All of this would require scratch-written custom flight software -- there wasn't any open source Y4 stabilization code out there, much less anything for rolling with Y4 -- and a ton of custom mechanical design and fabrication.

Astute readers will have noticed that the GY4S, our seemingly healthy idea baby, was in fact very ill -- it brought with it from the netherworld of imagination the worst case of Not Invented Here syndrome since Steve Ballmer era Microsoft. We were all infected. Our last sane decision on this project was to postpone the carbon fiber mesh cage because of fabrication complexity.

After that, we basically just kept building custom part after custom part, and writing dumb amounts of code that was either mostly redundant with open source projects or too speculative to be usable. We fabricated carbon fiber bearings, built custom RF circuits, wrote our own real-time PID system in Assembly, I think I even wrote custom XBee firmware for unfathomable reasons, and at one point we seriously considered building a custom magnetometer. The guy running the fellowship, Professor Eric Dufresne, gave us good advice about using off-the-shelf stuff that we ignored because we hated the Gantt charts he made us make (yes I know I am very stupid, thank you). To be fair to us, other fellowship mentors fell somewhere between giving us pointless advice and actively hindering our progress for nonsensical reasons. It also didn't help that Yale's Environmental Health and Safety department banned drone flying on campus after we'd already been accepted into the fellowship (not because of anything we did, to be clear), drastically limiting the amount of actual testing we could do. Nevertheless, despite the external annoyances, this failure was mostly ours. By the time a number of team members hit a patch of bad personal issues, we were already hosed, lost at sea without a chance at completing anything close to the stated goals of our project. By the end of the fellowship, we had a bunch of somewhat working disparate components that didn't really look like they could be combined to form the system we said we were building.

In retrospect, it's obvious that we should have used off-the-shelf parts and pre-existing software to build working prototypes before even considering custom solutions. We definitely should have used off-the-shelf drones instead of messing with the Y4 configuration. The real differentiator of our project was the high-level software, and we should have doubled down on that from day one. We should have been better at filtering the signal from the noise in the advice we were getting. We should have found a way to test, and been more forceful with Yale in ensuring that they helped us find that way. Most importantly, we should have been considerably more focused on the project's overall goals without getting caught up in technical curiosities.

The curious bit is that I already knew a lot of that stuff -- back in high school, I was the king of repurposing off-the-shelf parts and software in hacky ways to get stuff done quickly, and I always kept my eyes on the prize. A lot of that, however, was by necessity, and I suspect that I got carried away by the thrill of access to a full machine shop, a lot more funding than I'd had before, and a team of capable people eager to build new things from scratch.

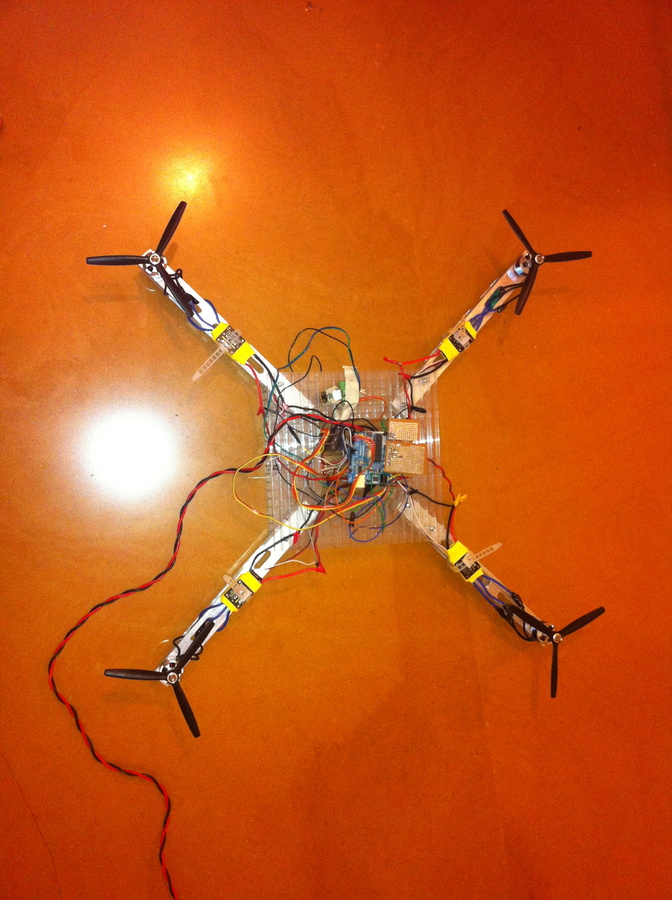

After all these years, all that's left of the project is some bad code, some mangled prototypes, and some lessons. Also, the videos below -- this is the prototype gimbal mounted on a heavily modified commercial 'hovercraft-style' quadcopter demonstrating various modes of movement. This actually looks better than it did in my memory -- maybe we were on to something there...

Return to robot index ↑

A quad-rotor aircraft for filmmaking, among other things. The Drobio quadcopter was actuated by four brushless motors run by an entirely custom Arduino-based stabilization, control, and basic autopilot system; it used a cheap smartphone for live video and connectivity, and was controllable via gesture recognition using a system where you wore a blue glove in front of a laptop webcam on the ground (we called this MagicGlove). I built it with Rahul Mewada.

Return to robot index ↑

A large-scale six-wheel-drive rover, equipped with a drill attached to a 4-DOF robotic arm controlled by an onboard laptop computer interfaced to an Arduino. It featured two modes of operation: manual wireless control via a PlayStation 3 remote, and autonomous waypoint following using input from a USB GPS module and the laptop's inbuilt camera.

That's me holding the remote. Gasp Rover featured an aluminium-supported wooden frame, with custom-built high-torque motors to support a load of up to 300kg, and a titanium drill spinning at up to 1000 rpm. On the software side, it was powered by custom-built high-power MOSFET motor drivers controlled by an Arduino; autonomous control worked through waypoint-based GPS path-following, with an OpenCV-based avoidance system using input from the camera of an onboard laptop. The software stack was somewhat rudimentary -- it wasn't doing any mapping, just local object avoidance. I built the rover mostly myself, and wrote the code entirely myself. The whole project took about a month.
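For the curious, waypoint-based GPS path-following boils down to repeatedly asking "which way is the next waypoint, and how far do I need to turn?". The Python sketch below illustrates that loop using standard great-circle formulas. It is not the rover's original code; the function names, the 3-metre arrival radius, and the assumption that a compass heading is available are all hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres


def distance_m(lat1, lon1, lat2, lon2):
    """Haversine great-circle distance between two lat/lon points, in metres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial bearing from point 1 to point 2, in degrees clockwise from north."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    y = math.sin(dlmb) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlmb)
    return math.degrees(math.atan2(y, x)) % 360.0


def heading_error_deg(current_heading, target_bearing):
    """Smallest signed turn in degrees; positive means turn right (clockwise)."""
    return (target_bearing - current_heading + 180.0) % 360.0 - 180.0


def steer(position, heading, waypoints, reached_radius_m=3.0):
    """Return (turn_deg, remaining_waypoints); turn_deg is None when the route is done."""
    # Drop every waypoint we are already close enough to.
    while waypoints and distance_m(*position, *waypoints[0]) < reached_radius_m:
        waypoints = waypoints[1:]
    if not waypoints:
        return None, []
    target = bearing_deg(*position, *waypoints[0])
    return heading_error_deg(heading, target), waypoints
```

The returned turn angle would then feed whatever drives the motors (and, on a rover like this one, get overridden by the obstacle avoidance whenever something is in the way).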

Return to robot index ↑

The MiniAture RoVer (MaRv) is the small-scale, four wheeled companion to the Gasp Rover. Built entirely out of aluminum, and featuring an innovative flexible (and shock-absorbing) four-pronged body, a mounted wireless camera, and an oscillating drill, MaRv is designed to remotely explore places that its larger sibling can’t fit into. Each prong of its body hosted a motor-wheel assembly independently connected to the frame via a spring mechanism, giving MaRv the flexibility to clamber efficiently over obstacles. We custom-built the oscillating drill (up to 1000 rpm) and its proportionally controllable motor drivers. The whole thing is manually controlled via wireless remote.

I built MaRv with Raunak Pednekar (pointing above) and Rahul Mewada (holding the remote), pictured above presenting the robot.

Return to robot index ↑

Robotic Hovercraft Design was a simple experimental hover platform, intended to be the base for a future robot.

It featured a wide wooden frame, a single brushless motor with a long-bladed propeller, and a customized ESC. It was never stable enough to use for its intended purpose, but it was a lot of fun to toss around the room. I built RHoDeS with Rahul Mewada, Satish Ahuja (holding the propeller above), and Rushabh Mashru.

Return to robot index ↑

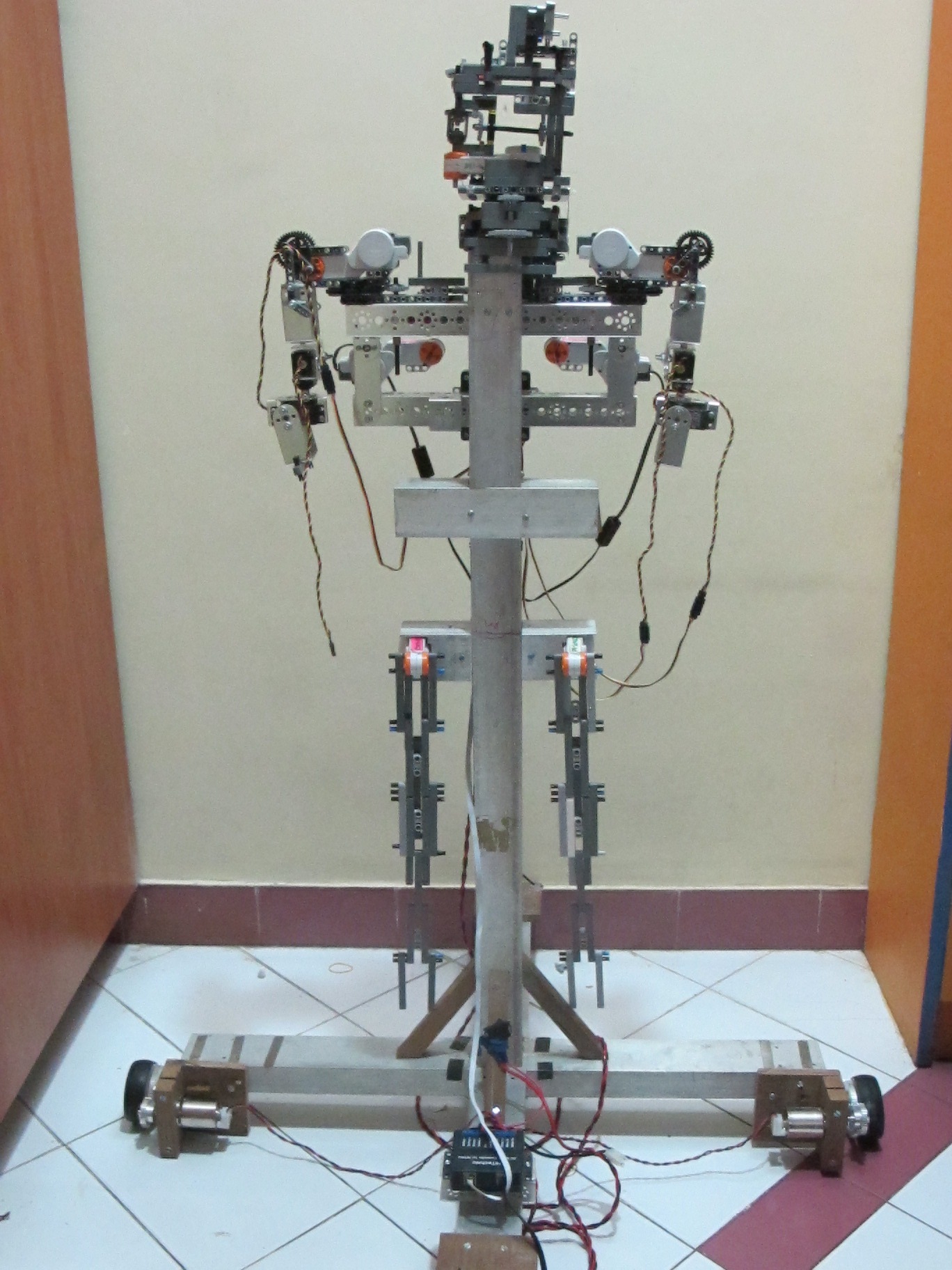

Originally named NERD, the Newton (Robot) Monitoring system is a human-sized robot built to assist the elderly and certain invalids in moving safely around an environment, walking behind them, guiding them, and catching them in case of a fall, as well as picking up and depositing certain objects using its 5-fingered hands (mounted on 6-DOF arms). It was based off a human-scale aluminium frame that could withstand at least 100 kilograms of impact force, with an omnidirectional (near-holonomic) four wheel drive system, and custom-built heavy-duty hi-torque motors that could support and move a total of 150 kilograms in tandem. The dual 6-DOF arms could take a load of up to 500 grams each, and wrists on each hand were attached to palms with 5 individually-controllable wire-actuated fingers. We also built a companion care-suit equipped with a heart-rate sensor, body temperature sensor, and microphone, all attached to an Arduino connected to a hacked wifi router via TTL serial; communication between the robot and the suit was through experimental NXT wi-fi modules (DI-WIFI) with a custom networking stack. Finally, we built a web app hosted from the router using custom firmware, for remote patient monitoring. I built Newton over 6 months, with Rushabh Mashru; it won first place at the Indian Robot Olympiad (Open Category), and represented India at the World Robot Olympiad, 2011.

Return to robot index ↑

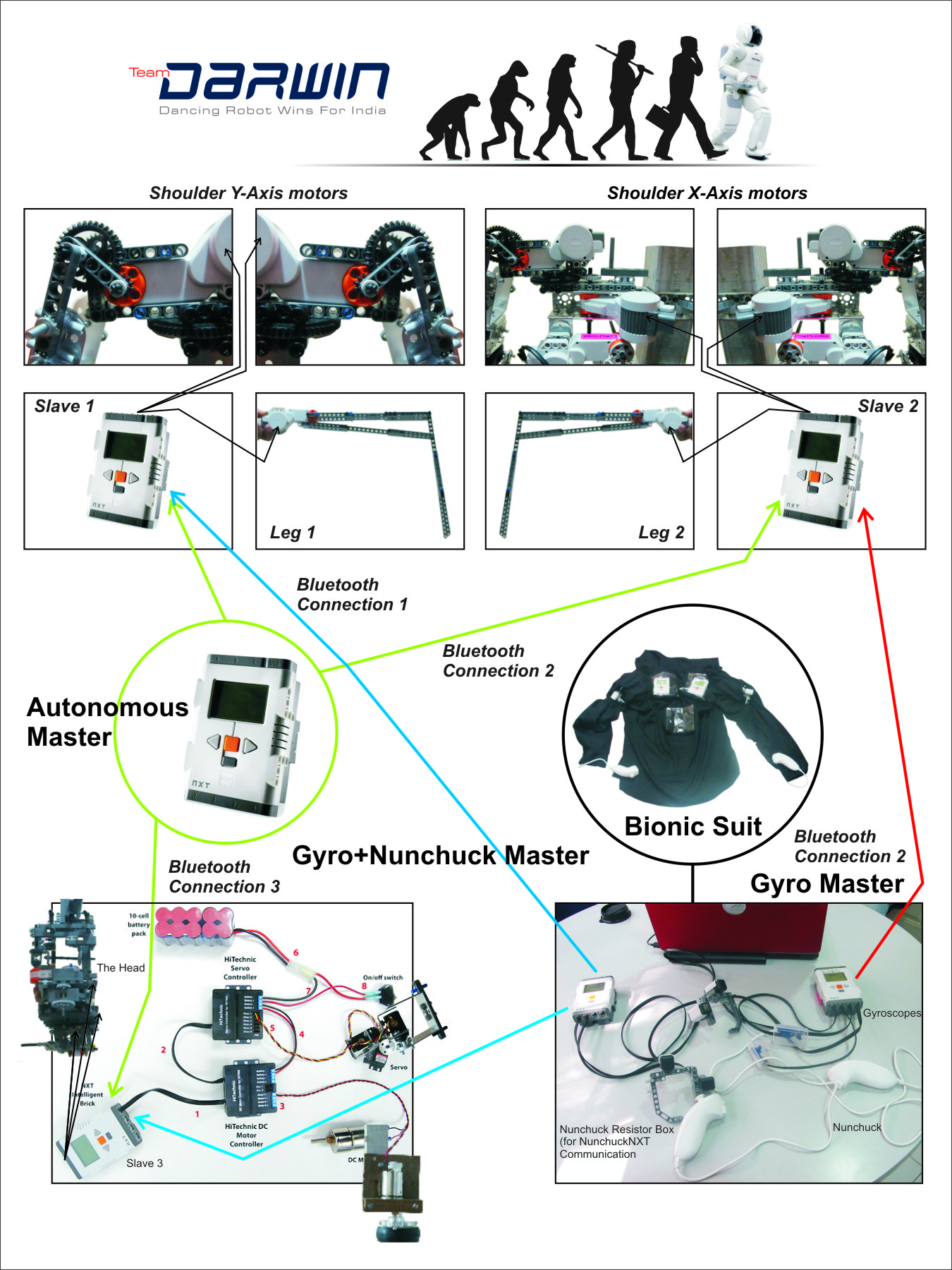

A dancing robot operated using a full-body motion-control suit, that could also record movements and play them back autonomously, DaRWIn won 1st place (Open Category) at the Indian Robot Olympiad and the ‘Best Technical Robot’ Award at the World Robot Olympiad in 2010. It featured dual 6-degrees-of-freedom robotic arms with a heavy duty, self-designed gear system for lifting, and a dual-motor differential drive system to move the robot. The head was equipped with moving eyebrows and eyes, with a left-to-right head shaking motion and 360-degree neck rotation, and two switchable (through 180-degree rotation) faces. The legs were independently actuated for kicking action. The bionic control suit included three NXT controllers, four gyroscopes, and two hacked Wii Nunchucks (combined accelerometer/joysticks). Three independent NXT controllers on the robot coordinated as nodes on a Bluetooth P2P network, running self-correcting motor rotation and localization programs as well as communicating with the suit. The robot was capable of full-body motion mirroring, motion recording and playback, and dynamic/random infinite dancing. I built it over six months with Rushabh Mashru and Yash Savani.

A note about the use of the Mindstorms NXT platform -- we would have preferred not to use it, but it was necessary according to the rules of the (Lego-sponsored) competition. In order to get around the limitations of the platform, we used modified firmware on the NXT controllers, wrote code exclusively in C, and used our own custom motor controllers and actuators where necessary.

Return to robot index ↑

An Arduino-based, Bluetooth-controlled car, BTCar was run off a Processing program with WASD input sending serial commands to an Arduino through a Sparkfun BlueSMiRF, and a self-built transistor-based motor driver circuit with proportional control. It was powered by a NiCad battery with a homebuilt charger. I built BTCar by myself as a fun weekend project, and then subsequently used it as a base for a bunch of random computer vision experiments. This was my first time using a 2.4 GHz wireless module.

Return to robot index ↑

The Soccerbots were a set of two autonomous wireless soccer-playing robots, controlled by a Java program based on input from an overhead camera with real-time image processing, through an Arduino-based USB wireless control system. Soccerbots tied for 1st place at the Robot Soccer contest at IIT Techfest 2010 (held at the Indian Institute of Technology Bombay) -- but this is mostly a joke, because literally none of the robots worked well enough to play, so we all 'won'. Despite the failure of the competition, this was my first exposure to computer vision. I built Soccerbots with Yash Savani and Arnav Tainwala.

Return to robot index ↑

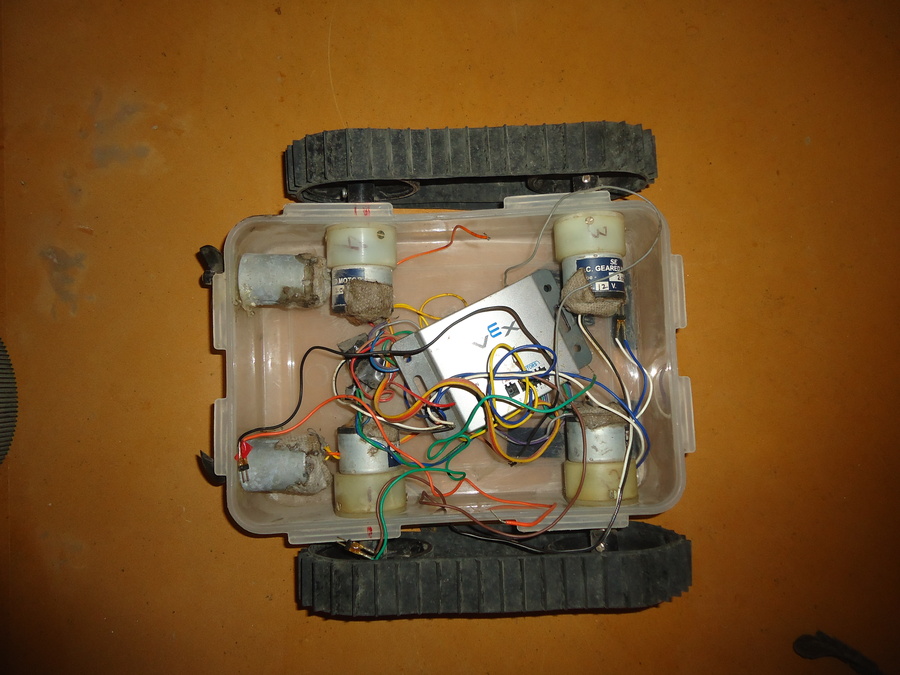

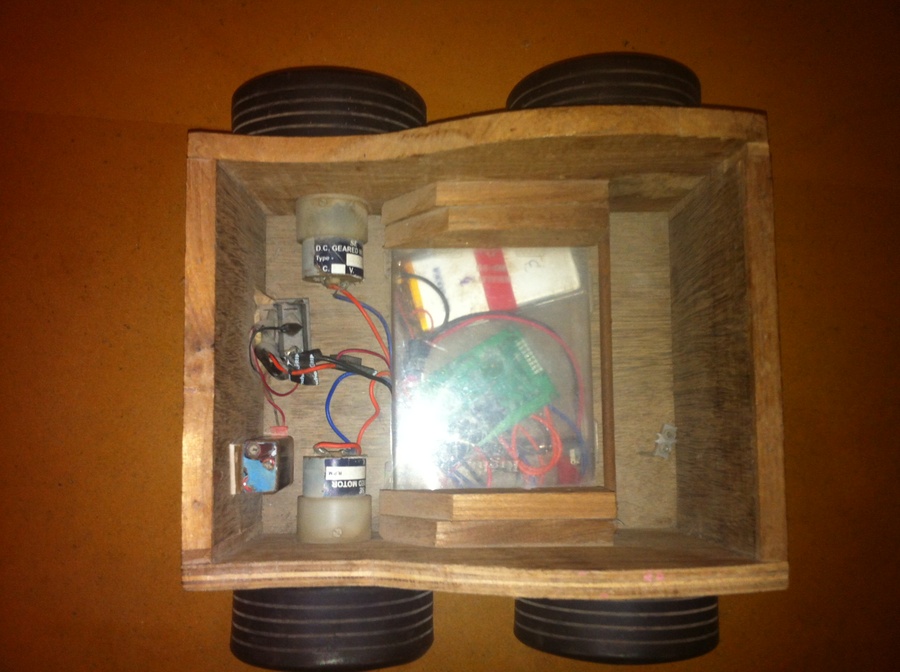

Qenduro is an amphibious robot that reached the semi-finals of the Enduro competition at IIT Techfest 2009 (held at the Indian Institute of Technology Bombay). It featured a tracked four-wheel drive system, dual propellers, and a Vex-based wireless controller. I built it with Hanish Mehta.

Return to robot index ↑

Tricksbot09 was a two-wheeled, extremely zippy autonomous robot that we built to compete in the Tricks 2009 robot maze competition at the Indian Institute of Technology, Bombay, where it won second place nationally. It featured a counterweighted chassis and two-wheeled drivetrain with a minimum of resistance, optimized to be able to turn on a dime. Our hair-trigger proportionally-controlled high-speed control system proved the fastest in the competition, when combined with a 3 IR-sensor array for line tracking and ultrasonic sensor-based localization. The brains of the operation was an Arduino-NXT hybrid controller, with the two microcontrollers talking via I2C. I built it with Yash Savani and Arnav Tainwalla.
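To give a flavor of what proportional control over a three-sensor line-tracking array looks like, here is a small Python sketch. It is not the code that ran on Tricksbot09; the normalized sensor readings, the `base` speed, and the `kp` gain are made-up values for illustration.

```python
def line_position(left, center, right):
    """Estimate where the line is under a 3-sensor array.

    Each reading is assumed to be normalized: 0.0 (no line) to 1.0 (fully on the line).
    Returns -1.0 (line far left) .. +1.0 (line far right), or None if the line is lost.
    """
    total = left + center + right
    if total == 0:
        return None
    return (right - left) / total


def motor_speeds(left, center, right, base=0.8, kp=0.5):
    """Proportional controller: returns (left_motor, right_motor) in [-1.0, 1.0]."""
    pos = line_position(left, center, right)
    if pos is None:
        return 0.0, 0.0  # line lost: stop (a real bot might spin to search instead)
    correction = kp * pos  # the further off-center, the harder the turn

    def clamp(v):
        return max(-1.0, min(1.0, v))

    # Line to the right -> speed up the left wheel and slow the right one.
    return clamp(base + correction), clamp(base - correction)
```

With the line dead center both wheels run at the base speed; as the line drifts toward one side, the wheel on that side slows down and the opposite wheel speeds up, in proportion to the error. A "hair-trigger" tuning corresponds to a large `kp`.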

There are pictures of this somewhere, I just have to find them.

Return to robot index ↑

A submarine robot with a camera mount, the ROV featured a dual-propeller ascent/descent and steering mechanism. The camera mount enabled image capture and live feed, with a 2.4 GHz wireless link to an Arduino that enabled semi-autonomous exploratory behavior, though nothing incredible. I built it with Rushabh Mashru.

Return to robot index ↑

Vertigo is a robot forklift built to compete at IIT Techfest 2008 (held at the Indian Institute of Technology Bombay), featuring a remote-controlled car, built-in track-belt forklift, and Li-Poly batteries with a homebrew charger. I built it with Aditya Sareen and Hanish Mehta.

Return to robot index ↑

A small, agile wooden robot, with a companion lifting mechanism, originally developed for the ‘Prison Break’ contest at IIT Techfest 2008 (held at the Indian Institute of Technology Bombay). The bot featured four independent drive motors, and was operable upside-down, could flip itself over by driving into a wall, and could right itself when fallen on its side. The companion lift mechanism could go three feet into the air, and support up to a kilogram of weight. I built it with Hanish Mehta and Aditya Sareen.

Return to robot index ↑

MindWarp was a voice-controlled robot with two 2-axis arms and a wheeled base, controlled by a Visual Basic 6.0 program writing to 8 bits of a parallel port, and featuring a camera in a molded head. I built it with some electronics help from Hiral Sanghvi. I don't have any pictures of this either, but it's very close to my heart because it's the first coding I ever did -- I taught myself Visual Basic from scratch using some online resources, then figured out how to do some signal processing on audio out of a textbook for the rudimentary voice recognition. I didn't build this for any particular reason -- I just really, really wanted to build a voice controlled robot, and so I did. It may be the most unqualified success of any project I've ever done vis-a-vis the original goals.

Return to robot index ↑

Dual floating boat robots to play 'robot water polo' with ping pong balls. These robots were made from thermocol and were equipped with two propellers each for differential drive. Ping pong balls were sucked up and shot out by a 30,000rpm suction motor mounted in a PVC pipe. Both bots were controlled using a single manual wired remote designed to be used by a single person. One robot carried the other to the goal at the beginning of each match, allowing the operator (me) to then switch to control whichever of the two bots was closest to a ball at any time. QBots won the ‘Most Innovative Strategy’ and ‘Best Rookie Roboteer’ awards at TRICKS 2007 at the Indian Institute of Technology, Bombay. I built them myself, with some help from my grandparents. I don't have any pictures of this project, but this project was an important one in my life -- it is the first robotics competition I participated in.

Return to robot index ↑

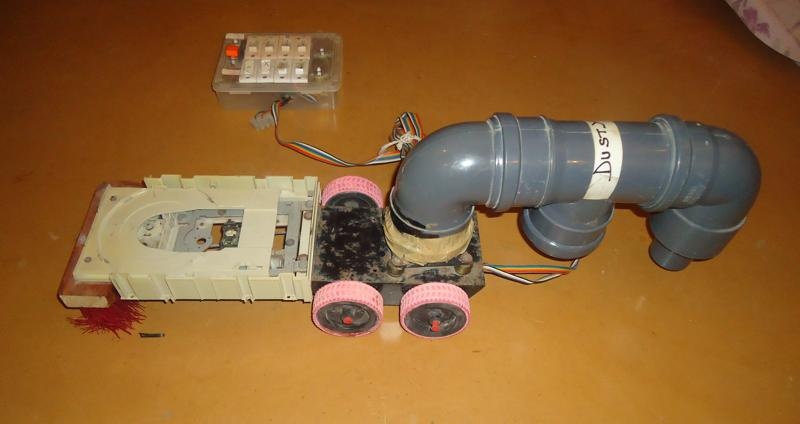

Dusty is a vacuum cleaning manually-controlled 'robot' made almost entirely of junk and spare parts. It has a vacuum system powered by a harvested computer cooling fan, and an automated brush powered by a gutted CD drive. It was one of the first mechatronic things I ever built. I relied on the tutelage of our family's electrician, Shiva, to learn how to power electrical components, wire things, and solder safely.

It won first place at the AIS National Technology Competition, where there was some skepticism about whether I (a sixth-grader) had actually built any significant part of it. For what it's worth, I designed it completely on my own and did most of the building, save for the parts I needed some help to do for the first time.

Return to robot index ↑

Building shit is fun. You should do more of it. If I can help you do that, shoot me an email at soham [at] soh (dot) am.